Last Tuesday, OpenAI made a big announcement that shook the world of artificial intelligence: the release of GPT-4, the company's most advanced large language model (LLM) to date.

The launch was followed by a flurry of posts highlighting the most striking use cases or selling updated prompt packs that promise to transform users' lives. But beyond the buzz, what actually changed? What is under the hood that will let companies and users solve new problems, or solve existing ones more easily?

In this post, we want to go deeper into the GPT-4 launch, drawing on reliable sources: the technical report (https://cdn.openai.com/papers/gpt-4.pdf), the research blog (https://openai.com/research/gpt-4), and the product blog (https://openai.com/product/gpt-4). Our aim is to give a clearer view of what actually changed with GPT-4 and how it may affect the field of artificial intelligence and its users.

The highlight: multimodality

What stands out most in this release is that GPT-4 is a multimodal model: it accepts both text and images as input, while its output is still text. This lets it take in information in a way closer to how we do. For example, you can ask it to explain the context of a painting or to suggest a recipe based on a photo of the inside of your fridge.

Being able to combine text and images brings the model closer to how we perceive and interact with the world, which should help it grasp the nuances and ambiguities of different contexts and carry out more complex tasks.

This opens up a new world of possibilities for human-machine interaction and promises to bring significant benefits to companies and users who want to solve problems more easily and efficiently.

Image input is not yet available in the public version of ChatGPT or through the GPT-4 API, but OpenAI is already working with Be My Eyes on a "Virtual Volunteer" feature that may bring this capability to users soon.

But how does it perform in terms of text production?

Even without image input, GPT-4 outperforms its predecessor, GPT-3.5, on text tasks. OpenAI has not said what drives these gains: its technical report explicitly withholds details about the architecture, including model size, so the widely repeated claim that GPT-4 has 100 times more parameters than GPT-3.5 is not confirmed by the company.

In addition, the GPT-4 API can accept up to 32k tokens of context (the standard gpt-4 model takes 8k, and the gpt-4-32k variant takes 32k), compared with 4k tokens for GPT-3.5. This makes some applications that were impractical with GPT-3.5 feasible with GPT-4, such as processing an entire book in one or a few passes.
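A rough way to see what the larger context window buys is to count how many requests a long document needs. The sketch below is illustrative only: it assumes about 4 characters per token (OpenAI's rule of thumb for English text; exact counts require a tokenizer such as tiktoken), reserves a hypothetical 1,000 tokens per request for instructions and the answer, and uses a made-up book length of 120,000 tokens. The function names are hypothetical helpers, not part of any OpenAI library.

```python
import math

# Assumption: ~4 characters per token, OpenAI's rough rule of thumb for
# English text. Exact counts need a real tokenizer (e.g. tiktoken).
CHARS_PER_TOKEN = 4

# Context windows (in tokens) of the models discussed, as offered at launch.
CONTEXT_WINDOWS = {
    "gpt-3.5-turbo": 4_096,
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
}


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count (not an exact count)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def usable_tokens(context_window: int, reserved_tokens: int) -> int:
    """Tokens left for document text after reserving room for the prompt and answer."""
    usable = context_window - reserved_tokens
    if usable <= 0:
        raise ValueError("reserved_tokens must be smaller than the context window")
    return usable


def passes_needed(total_tokens: int, context_window: int,
                  reserved_tokens: int = 1_000) -> int:
    """Number of requests needed to feed total_tokens of text through the model."""
    return math.ceil(total_tokens / usable_tokens(context_window, reserved_tokens))


def chunk_text(text: str, context_window: int,
               reserved_tokens: int = 1_000) -> list[str]:
    """Split text into consecutive pieces that fit the estimated per-request budget.
    Cuts on character offsets; a real pipeline would split on paragraph boundaries."""
    max_chars = usable_tokens(context_window, reserved_tokens) * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


if __name__ == "__main__":
    book_tokens = 120_000  # hypothetical book length
    for model, window in CONTEXT_WINDOWS.items():
        print(f"{model}: {passes_needed(book_tokens, window)} requests")
```

Under these assumptions the hypothetical book takes 39 requests with a 4k window, 17 with 8k, and 4 with 32k. Each split also cuts the context the model can see at once, which is why fewer, larger passes matter for tasks like summarizing a whole book.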

In routine, open-ended tasks such as writing a blog post, the difference in text quality is hard to notice; GPT-4 shows its real potential in complex scenarios. In OpenAI's own words, "the difference comes out when the complexity of the task reaches a sufficient threshold – GPT-4 is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5." In other words, GPT-4 stands out in tasks that require deeper, more specialized knowledge, such as exams that demand a large body of content.

OpenAI evaluated GPT-4 on exams designed to test humans on a large amount of specialized knowledge, such as the SAT (the US college admissions test) and the Uniform Bar Exam (the US counterpart of Brazil's OAB bar exam). GPT-4 outperformed GPT-3.5: on a simulated bar exam, for instance, it scored around the top 10% of test takers, while GPT-3.5 scored around the bottom 10%.

Even so, GPT-4 is not perfect. It still states false facts and can hallucinate, which limits its use in applications that require fully correct answers. For specific scenarios and problems, fine-tuning may be an important way to reduce wrong answers and improve accuracy, although at launch OpenAI did not offer fine-tuning for GPT-4.

Availability

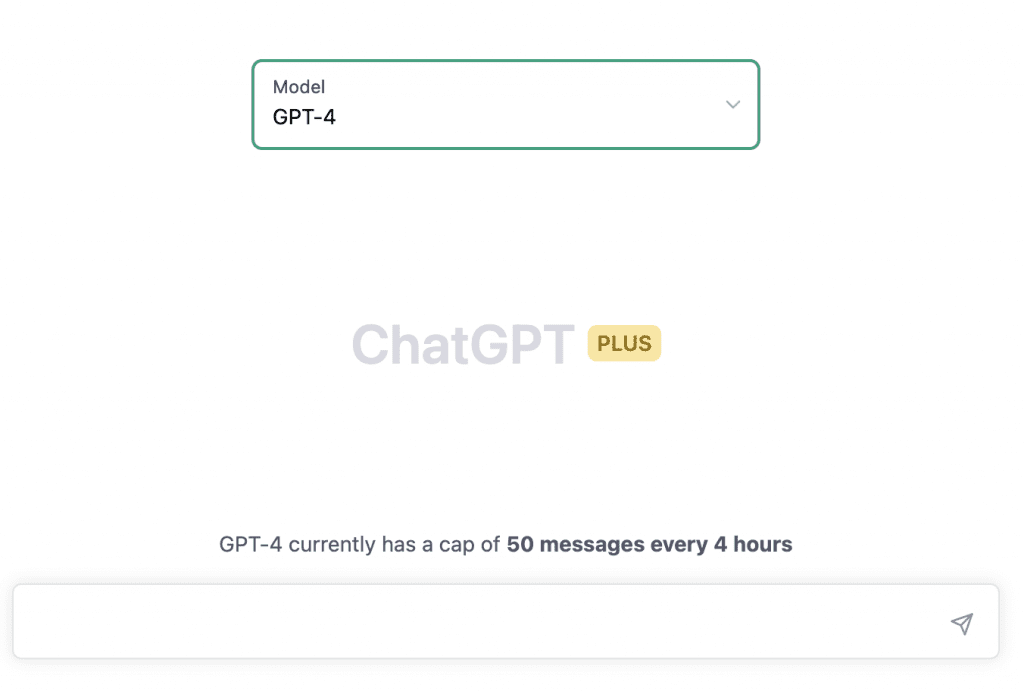

ChatGPT Plus users now have access to GPT-4 on the chat.openai.com platform, with a defined usage limit. OpenAI will adjust the exact usage limit depending on demand and system performance in practice, but severe capacity restrictions are expected (although the company should increase and optimize capacity over the next few months). It is important to note that, at the time this post was written, the officially announced usage limit of GPT-4 was 100 messages every 4 hours, but when accessing ChatGPT it warned that the limit was 50, as shown in the image below.
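A cap of the form "N messages every H hours" can be tracked on the client side so an application knows how many requests it has left. The sketch below is a minimal illustration that assumes a sliding window, where each message stops counting exactly H hours after it was sent; OpenAI has not documented how ChatGPT actually enforces its cap, and the class name RollingMessageCap and its methods are hypothetical.

```python
import time
from collections import deque
from typing import Callable, Deque


class RollingMessageCap:
    """Hypothetical client-side tracker for a cap such as "100 messages every 4 hours".

    Assumes a sliding window: a message stops counting exactly window_seconds
    after it was sent. OpenAI has not documented how ChatGPT enforces its cap.
    """

    def __init__(self, max_messages: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested without waiting
        self._sent: Deque[float] = deque()  # send times still inside the window

    def _evict_expired(self, now: float) -> None:
        # Timestamps are appended in order, so expired ones sit at the left end.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()

    def remaining(self) -> int:
        """Messages that can still be sent right now."""
        self._evict_expired(self.clock())
        return self.max_messages - len(self._sent)

    def try_send(self) -> bool:
        """Record one message if the cap allows it; return False if it is exhausted."""
        now = self.clock()
        self._evict_expired(now)
        if len(self._sent) >= self.max_messages:
            return False
        self._sent.append(now)
        return True


if __name__ == "__main__":
    fake_now = [0.0]  # simulated clock, in seconds
    cap = RollingMessageCap(50, 4 * 3600, clock=lambda: fake_now[0])
    accepted = sum(cap.try_send() for _ in range(60))
    print(accepted, cap.remaining())  # 50 0
    fake_now[0] = 4 * 3600  # four hours later, the first batch has expired
    print(cap.remaining())  # 50
```

Passing 100 instead of 50 models the officially announced cap. If the limit turns out to reset on a fixed schedule rather than roll, a simple counter that resets every 4 hours would be the better fit.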

To try GPT-4 on the platform, you need a ChatGPT Plus subscription ($20 per month); then select GPT-4 from the model menu. OpenAI has also said it may introduce some amount of free GPT-4 queries so that users without a subscription can try it.

Author: Gustavo Reis, COO, Hop AI
